HealthJoy’s Spring 2025 Product Updates

2025 is off to an incredible start at HealthJoy, with a series of exciting product updates that are already making an impact!

Connected Navigation Platform

Guiding to high-value care

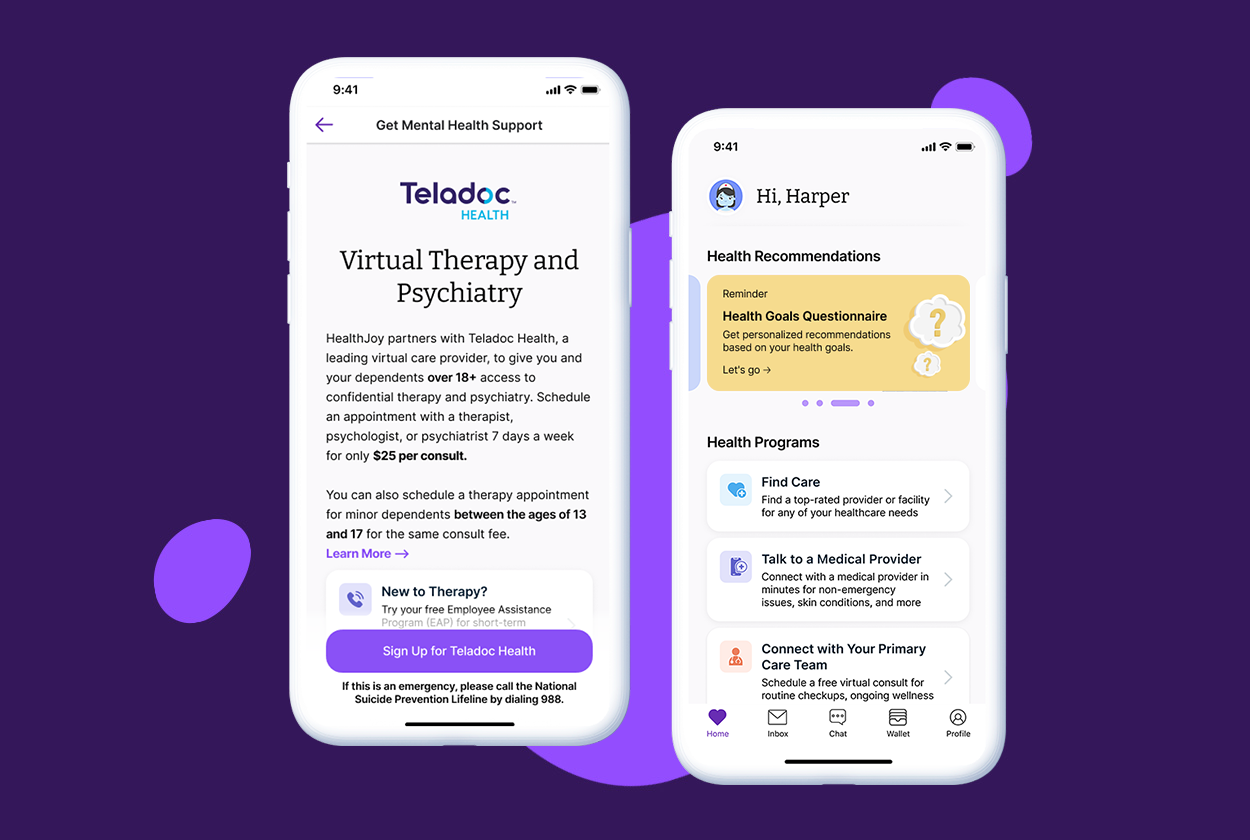

Behavioral Health

Foster a mentally healthy workplace

EAP

Supporting holistic wellbeing

Virtual MSK Care

Reimagining musculoskeletal care

Virtual Primary Care

Powered by smart navigation

Surgery Centers of Excellence

Best-in-class surgical outcomes

Virtual Urgent Care

Immediate care, any hour of the day

Chronic Care

A new approach to chronic care

Integrations

Flexible to any strategy

Everyone has heard the claim that “artificial intelligence is going to change healthcare.” Usually it comes with a reference to how IBM's Watson is being used for healthcare diagnoses, or with talk of a broad industry movement and little detail. At HealthJoy, AI is one of the core technical components that makes our members' lives easier throughout their healthcare experience. Earlier this year, we had the privilege of hosting two MIT computer science and engineering students through our internship program. Both specialize in artificial intelligence and neural network technologies at MIT, with prior experience at NASA, the IBM Watson research lab, and more. We were extremely excited to have them on board and had the perfect project in store:

JOY Vision was a project that kept getting pushed back by more immediate needs. We wanted members to be able to take a photo of their insurance membership card and have its different fields automatically recognized and entered into our system, rather than waiting for our team to input the information manually. This project fits squarely within our mission of making healthcare as easy as possible (and leveraging AI to do so). It might save only a few seconds during onboarding, but we felt it was important, and it would also increase data collection rates.

The goal of this project might sound easy (Apple has been doing it for years in its wallet software), but several factors make it particularly challenging in healthcare. On the one hand, credit cards are standardized in both the information they present and its formatting. Visa, Mastercard, and American Express all have strict design guidelines. Card numbers are typically 15 or 16 digits long and must start with specific digits, and security codes are exactly 3 or 4 digits long. Issuers can't simply add extra products to a credit card with different member numbers, because that's against the guidelines (and, for the techies, such numbers won't pass the credit card checksum).
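For the techies: the checksum in question is the Luhn (mod 10) algorithm, which every valid card number satisfies. Here's a minimal Python sketch; the function name `luhn_valid` is ours for illustration and is not part of JOY Vision.

```python
def luhn_valid(card_number: str) -> bool:
    """Return True if the digits in card_number pass the Luhn (mod 10) checksum.

    Any character other than 0-9 (spaces, dashes) is ignored.
    """
    digits = [int(ch) for ch in card_number if ch in "0123456789"]
    if len(digits) < 2:
        return False
    total = 0
    # Starting from the rightmost (check) digit, double every second digit.
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9  # equivalent to adding the two digits of the product
        total += digit
    return total % 10 == 0


print(luhn_valid("4111 1111 1111 1111"))  # True  (a well-known Visa test number)
print(luhn_valid("4111 1111 1111 1112"))  # False (last digit changed)
```

Changing any single digit of a valid number makes the check fail, which is why extra member numbers can't simply be printed on a credit card.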

On the other hand, the healthcare space is basically the wild west for membership cards. The Blue Cross Blue Shield Association does have some guidelines for its cards, but once you leave the Blues, all bets are off. This is especially true of third-party administrators (TPAs), which might bundle 10 different products onto a single card to save on printing. Here are the variations we found during our early research on more than 10,000 different member cards:

Our interns started the project by running a wide variety of open source and cloud-based OCR packages on the member cards to compare their performance. We began with Google's Tesseract, CuneiForm (Cognitive OpenOCR), GOCR, Ocrad, and a few others, but none solved our problem on its own. Because membership cards vary so much, these programs recognized fewer than half of the member numbers, for example. We needed a custom-engineered solution that could learn over time.

A necessary evil in the world of data science is cleaning up data. We had already built an extensive set of tools for cleaning chat data but hadn't worked much with images. We needed to make it easier for the system to “see” the characters in an image, and we had to let members know when their photos had poor lighting or alignment. We worked out how to manipulate each image to find the borders of the card and normalize its contrast and coloring so the system could “see” it better. (That said, with the neural network we chose, much less pre-processing is needed than with other computer vision methods.)
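To make those clean-up steps concrete, here's a minimal, dependency-free Python sketch that works on a grayscale image stored as a list of pixel rows. It assumes a light card photographed against a darker background, and it skips alignment (perspective) correction entirely. The function names and thresholds (`lighting_problem`, `find_card_bounds`, `stretch_contrast`, 60/200/128) are hypothetical; it illustrates the ideas rather than reproducing our pipeline, and a production version would more likely rely on an image-processing library.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale pixels: 0 is black, 255 is white


def lighting_problem(image: Image, too_dark: float = 60, too_bright: float = 200) -> Optional[str]:
    """Return a hint for the member if the photo looks badly lit, else None."""
    pixels = [px for row in image for px in row]
    brightness = sum(pixels) / len(pixels)
    if brightness < too_dark:
        return "Photo is too dark. Try moving to a brighter spot."
    if brightness > too_bright:
        return "Photo is washed out. Try avoiding glare."
    return None


def find_card_bounds(image: Image, threshold: int = 128) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) of the region brighter than threshold.

    Bottom and right are exclusive, so the box can be used directly in slices.
    """
    rows = [r for r, row in enumerate(image) if any(px > threshold for px in row)]
    cols = [c for c in range(len(image[0])) if any(row[c] > threshold for row in image)]
    if not rows or not cols:
        return None
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def crop(image: Image, box: Tuple[int, int, int, int]) -> Image:
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def stretch_contrast(image: Image) -> Image:
    """Rescale linearly so the darkest pixel becomes 0 and the brightest 255."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in image]
    return [[round((px - lo) * 255 / (hi - lo)) for px in row] for row in image]


photo = [
    [20, 20, 20, 20, 20],
    [20, 150, 190, 170, 20],
    [20, 160, 150, 180, 20],
    [20, 20, 20, 20, 20],
]
print(lighting_problem(photo))  # None (mean brightness is 64)
card = crop(photo, find_card_bounds(photo))
print(stretch_contrast(card))  # [[0, 255, 128], [64, 0, 191]]
```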

Typical deep learning techniques for computer vision involve Convolutional Neural Networks (CNNs, or ConvNets). This category of neural networks has proven effective at image classification and recognition. Google uses them to identify objects, traffic signs, and faces in Google Maps imagery. You've probably even helped train Google's algorithms while using some of its services: ever been asked to pick out a sign or a storefront to prove you're human? That's you helping to train a CNN. Because the kind of CNN we used classifies a single item per image, we needed to split up all of the characters on a card and process them individually. We took each letter or digit within a box and cut it out as a separate image. Each character image could then be fed into the CNN, which compares its pixel shading with what it has learned from similar numbers and letters.
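One simple way to split a line of text into characters is a vertical projection: scan column by column and cut wherever a column contains no ink. The Python sketch below assumes a cropped, high-contrast line image (a list of pixel rows, 0 = black) and characters that don't touch each other; `segment_characters` and its threshold are illustrative, not necessarily the method JOY Vision uses.

```python
from typing import List, Optional

Image = List[List[int]]  # grayscale pixels: 0 is black, 255 is white


def segment_characters(line_image: Image, ink_threshold: int = 100) -> List[Image]:
    """Split a cropped line of text into one image per character.

    A column counts as ink if any pixel in it is darker than ink_threshold;
    each run of ink columns between blank columns becomes one character.
    """
    width = len(line_image[0])
    has_ink = [any(row[c] < ink_threshold for row in line_image) for c in range(width)]
    characters: List[Image] = []
    start: Optional[int] = None
    for c, ink in enumerate(has_ink + [False]):  # trailing False closes a final run
        if ink and start is None:
            start = c  # a character begins
        elif not ink and start is not None:
            characters.append([row[start:c] for row in line_image])
            start = None  # back in a gap
    return characters


# Two "characters" (columns 0-1 and column 4) separated by white gaps.
line = [
    [0, 0, 255, 255, 0, 255, 255],
    [0, 255, 255, 255, 0, 255, 255],
    [0, 0, 255, 255, 0, 255, 255],
]
pieces = segment_characters(line)
print(len(pieces))  # 2
```

Each resulting piece would then be resized to the CNN's fixed input size before classification; the network itself isn't shown here.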

Once we had addressed character recognition, our next problem was finding the data we wanted and ignoring the rest. We wanted the member's ID number, the group ID, and the prescription drug BIN number if it was included on the membership card. The BIN is the only one of these numbers with a set industry standard (for its number of digits), so we had to try a few automated ways to recognize the other numbers on the card. After a few days of trying different methods without a breakthrough, one of the interns found inspiration in Snapchat. Snapchat filters are playful overlays that add images in real time and track the movement of your face.
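Because the BIN has a fixed length (six digits), it's the one field a simple pattern can pick out of OCR'd text. A minimal Python sketch, assuming the number follows a label such as "RxBIN" or "BIN" (labeling varies from card to card, so treat the regular expression as an assumption); `find_bin` is a hypothetical helper:

```python
import re
from typing import Optional

# Assumption: the BIN follows a label such as "RxBIN", "Rx BIN" or "BIN",
# optionally with ":" or "#", and is exactly six digits.
BIN_PATTERN = re.compile(r"\b(?:Rx\s*)?BIN\s*[:#]?\s*(\d{6})\b", re.IGNORECASE)


def find_bin(ocr_text: str) -> Optional[str]:
    """Return the six-digit BIN from OCR'd card text, or None if absent."""
    match = BIN_PATTERN.search(ocr_text)
    return match.group(1) if match else None


text = "Member ID W123456789  Group 00042  RxBIN: 610014  RxPCN: 9999"
print(find_bin(text))  # 610014
```

The trailing `\b` rejects a seven-digit run, and member and group IDs, which have no fixed length, are exactly what a pattern like this can't find.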

When you start using a filter, you first select your face on the screen; only then does Snapchat layer on something like a warthog's snout or bunny ears. Similarly, if we asked the user to quickly tap on the two or three numbers we were looking for, we could take care of figuring out the data. The user wouldn't have to select the data in any particular order: just three taps and they're done. On the backend, bounding boxes are created around the areas the user highlights with their finger.
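Here's a minimal sketch of how a tap might become a box, assuming the card has already been cropped (so tap positions are in card pixels) and using a fixed box size around the tap. The `Box` type, `box_from_tap`, and the default half-width and half-height are hypothetical; a real implementation could instead grow the box to fit the text under the finger. Storing fractions of the card's width and height rather than pixels is what lets photos taken at different resolutions be compared.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box in card-relative coordinates (0.0 to 1.0 on each axis)."""
    x0: float
    y0: float
    x1: float
    y1: float


def box_from_tap(tap_x: float, tap_y: float, card_width: float, card_height: float,
                 half_w: float = 0.15, half_h: float = 0.04) -> Box:
    """Build a fixed-size box around a tap on the cropped card image.

    Tap coordinates are in pixels; the result is expressed as fractions of the
    card's width and height and clamped to the card's edges.
    """
    cx, cy = tap_x / card_width, tap_y / card_height
    return Box(
        x0=max(0.0, cx - half_w),
        y0=max(0.0, cy - half_h),
        x1=min(1.0, cx + half_w),
        y1=min(1.0, cy + half_h),
    )


# The same tap position on a small and a large photo yields the same box.
print(box_from_tap(500, 100, 1000, 625) == box_from_tap(1000, 200, 2000, 1250))  # True
```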

The coordinates of each box (relative to the whole card) are saved and fed into an object detection algorithm, which compares the location and size of each box with those of fields on other insurance cards in our database. Once we have the exact location of each ID, we can easily run those characters through our model. Voilà! We now have an automated way to store IDs with JOY Vision.
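We won't reproduce the object detection model here, but the idea of comparing box location and size against known cards can be illustrated with a simpler stand-in: label each tapped box with the field whose known location on previously processed cards overlaps it most, measured by intersection over union (IoU). This Python sketch is a deliberate simplification, not the algorithm JOY Vision uses; `label_boxes`, `min_iou`, and the field names are hypothetical.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1), card-relative, 0.0 to 1.0


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 = disjoint, 1.0 = identical)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_boxes(tapped: List[Box], known_fields: List[Tuple[str, Box]],
                min_iou: float = 0.3) -> List[Optional[str]]:
    """Label each tapped box with the best-overlapping known field location.

    known_fields holds (label, box) pairs from previously processed cards;
    boxes that overlap no field by at least min_iou are left unlabeled (None).
    """
    labels: List[Optional[str]] = []
    for box in tapped:
        best_label, best_score = None, min_iou
        for label, field_box in known_fields:
            score = iou(box, field_box)
            if score >= best_score:
                best_label, best_score = label, score
        labels.append(best_label)
    return labels


known = [
    ("member_id", (0.05, 0.40, 0.45, 0.50)),
    ("group_id", (0.05, 0.55, 0.45, 0.65)),
    ("rx_bin", (0.55, 0.75, 0.95, 0.85)),
]
taps = [(0.55, 0.74, 0.93, 0.84), (0.06, 0.41, 0.44, 0.51)]
print(label_boxes(taps, known))  # ['rx_bin', 'member_id']
```

Because the taps can arrive in any order, each box is labeled independently; a learned detector would replace the fixed `known` list with what it has learned from many cards.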

We would like to thank our MIT interns Haripriya and Katharina for doing an amazing job on JOY Vision. Their work will be integrated into our app over the next few months to streamline the onboarding process. It’s this type of innovative thinking and commitment to our mission that continues to make healthcare simpler, easier, and less expensive for our members.